Friday, February 27, 2015

Thursday, February 19, 2015

DB2 WITH TSA

DB2 HADR AUTOMATION WITH TSA

Environment:Nodes: Two servers

OS: REDHAT 6.6 64 Bit

DB2: V10.1 FP 3

Network: 1 Ethernet card (NIC) on each server

Installing TSA:

By default TSA is included in DB2 binary except for express edition. Else do a custom installation to install TSA using db2 installer or installSAM.

Run the TSA installSAM command to start installation this will check for prerequisite and generates an output file in /tmp directory with name "installSAM.*.log" if you run into issues for installation check this file to find the dependencies.

Note: If you install TSA with installSAM command there are possibilities of DB2 automation scripts not installing in the path "/opt/ibm/db2/V10.1/install/tsamp" Check the errors section to solve.

Setting up DB2 HADR:

1. Create the database: (For this example I'm using DB2 "SAMPLE" database provided with it.)

2. Update the logarchmeth1 to archive logs for HADR replay.

3. Add HADR related host parameters

4. Add HADR related service parameters

5. Change HADR synchronisation mode to SYNC

6. Backup database and restore it on the STANDBY server

7. Modify the HADR related parameters to reflect the standby

8. Sync the db2nodes.cfg, uname -n, hostname to show same hostname either in short name or FQDN, don't mix FQDN with short name as TSA will have problems

8. Start the standby

9. Start the primary.

Setting up TSA:

Prepnode:

Run the prepnode command on each node before configuring db2haicu.

NOTE:Run the db2haicu on standby server first then on primary server.

Setting up rsh between the servers:

TSA commands use the rsh service to run the commands on the remote secondary servers, so configure the rsh between the servers, follow the link provided below to set up rsh:

http://dbaworks.blogspot.in/2015/08/setting-up-rsh-on-two-redhat-6-servers.html

Errors faced due to rsh on BOTH the servers:

The below errors where faced since DB2RSHCMD is not configured properly in DB2, set this on BOTH the servers.

2015-08-06-00.00.00.147304+330 E14063538E425 LEVEL: Error (OS)

PID : 22251 TID : 46989377371648 PROC : db2start

INSTANCE: db2inst1 NODE : 000

HOSTNAME: redhat_2.localdomain

FUNCTION: DB2 UDB, oper system services, sqloRemoteShell, probe:50

CALLED : OS, -, execvp OSERR: ENOENT (2)

MESSAGE : Error invoking remote shell program.

DATA #1 : String, 3 bytes

RSH

2015-08-06-00.00.01.160122+330 E14063964E773 LEVEL: Warning

PID : 22190 TID : 46989377371648 PROC : db2start

INSTANCE: db2inst1 NODE : 000

HOSTNAME: redhat_2.localdomain

FUNCTION: DB2 UDB, oper system services, sqloPdbInitializeRemoteCommand, probe:110

MESSAGE : ZRC=0x810F0012=-2129723374=SQLO_COMM_ERR "Communication error"

DATA #1 : String, 348 bytes

The remote shell program terminated prematurely. The most likely causes are either that the DB2RSHCMD registry variable is set to an invalid setting, or the remote command program failed to authenticate. It

can also be the remote daemon is not completely started up yet to handle the request. This attempt will retry a few times before giving up.

DATA #2 : String, 3 bytes

RSH

2015-08-06-00.00.01.160611+330 E14064738E526 LEVEL: Error

PID : 22190 TID : 46989377371648 PROC : db2start

INSTANCE: db2inst1 NODE : 000

HOSTNAME: redhat_2.localdomain

FUNCTION: DB2 UDB, oper system services, sqloPdbInitializeRemoteCommand, probe:200

MESSAGE : ZRC=0x810F0012=-2129723374=SQLO_COMM_ERR "Communication error"

DATA #1 : String, 20 bytes

redhat_2.localdomain

DATA #2 : String, 8 bytes

redhat_2

DATA #3 : String, 51 bytes

No diagnostics available from remote shell program.

2015-08-06-00.00.01.160844+330 I14065265E1203 LEVEL: Event

PID : 22190 TID : 46989377371648 PROC : db2start

INSTANCE: db2inst1 NODE : 000

HOSTNAME: redhat_2.localdomain

FUNCTION: DB2 UDB, oper system services, sqloPdbOpenConnection, probe:10

MESSAGE : ZRC=0x810F0012=-2129723374=SQLO_COMM_ERR "Communication error"

DATA #1 : String, 27 bytes

Retry the connection again.

DATA #2 : unsigned integer, 8 bytes

12

DATA #3 : unsigned integer, 8 bytes

30

CALLSTCK: (Static functions may not be resolved correctly, as they are resolved to the nearest symbol)

[0] 0x00002ABC826E75FE pdLog + 0x38E

[1] 0x00002ABC833F5995 sqloPdbOpenConnection + 0x2D5

[2] 0x00002ABC833F5576 sqloPdbStartStopNode + 0x146

[3] 0x00002ABC829A2B1F _Z20sqlePdbStartStopNodeiP13sqlo_nodelinePcS1_tS1_mP25sqlo_start_stop_node_info + 0xEF

[4] 0x00002ABC82995699 _Z18sqleIssueStartStopiPvPcS0_P9sqlf_kcfdP18SQLE_INTERNAL_ARGSjjP5sqlca + 0x20B9

[5] 0x00002ABC8299238A _Z20sqleProcessStartStopiPvP18SQLE_INTERNAL_ARGSP9sqlf_kcfdPcjjP5sqlca + 0x3CA

[6] 0x00000000004061FE main + 0x23EE

[7] 0x0000003D03E1ED5D __libc_start_main + 0xFD

[8] 0x0000000000403D79 __gxx_personality_v0 + 0x1C9

Solution:

Set the DB2RSHCMD registry variable to the full path of rsh, for example:

To find rsh full path:

[root@redhat_2 ~]# which rsh

/usr/bin/rsh

Now set that above path to DB2RSHCMD registry profile.

[db2inst1@redhat_1 ~]$ db2set DB2RSHCMD=/usr/bin/rsh

Now you can start DB2 hadr from TSA by using chrg command.

- Operating system: UNIX, Linux

- Default=rsh (remsh on HP-UX), Values are a full path name to rsh, remsh, or ssh

- By default, DB2 database system uses rsh as the communication protocol when starting remote database partitions and with the db2_all script to run utilities and commands on all database partitions. For example, setting this registry variable to the full path name for ssh causes DB2 database products to use ssh as the communication protocol for the requested running of the utilities and commands. It may also be set to the full path name of a script that invokes the remote command program with appropriate default parameters. This variable is only required for partitioned databases, or for single-partition environments where the db2start command is run from a different server than where the DB2 product was installed and for rsh or ssh dependant utilities that have the capability of starting, stopping or monitoring a DB2 instance, such as db2gcf. The instance owner must be able to use the specified remote shell program to log in from each DB2 database node to each other DB2 database node, without being prompted for any additional verification or authentication (that is, passwords or password phrases).

The below link talks about redhat bug, but its not related to the above errors:

http://www-01.ibm.com/support/docview.wss?uid=swg21637163

If you set up rsh properly proceed further.

On standby server first:

1. Login as ROOT and run "export CT_MANAGEMENT_SCOPE=2" on each node so that any TSA commands executed will be running on both nodes than on the node itself.

2. Run preprpnode, if it completes successfully proceed to next step

3. As instance issue db2haicu (DB2 HIGH AVAILABILITY INSTANCE CONFIGURATION UTILITY)

4. Choose 1 to create cluster domain

5. Enter a valid name for cluster domain.

6. Enter number of nodes in the cluster domain. for this example it is 2.

7. Enter the host name for the nodes in the cluster domain: (check the output from commands: hostname, uname -n, db2nodes.cfg ) then give the proper hostname else cluster creation will fail. If it fails then sync the hostname to be same as shown in the above command's output.

8. Enter 1 to create the domain

9. Enter 1 to create the quorum enter the IP address for quorum for this example use the GATEWAY IP address 192.168.5.1 which is pingable from both the nodes.

10. Enter 1 for creating Network Interface cards

11. Add the network card eth0 to db2_public_network on both the nodes

12. Once network completes, the db2haicu will show the cluster manager options to use for this example use 1 i.e TSA

13. Enter 1 to validate and automate HADR

On primary server:

Once db2haicu completes on standby run that in primary, db2haicu automatically detects the HADR enabled database. Enter 1 to validate and automate HADR in primary.

After db2haicu completes you can check the status of the cluster using the lssam command.

Check cluster domain with TSA commands:

- You can check the cluster created by using TSA lsrpdomain command.

- You can start/stop the cluster domain with startrpdomain/stoprpdomain

- List resource groups using lsrg and use lsrg -m option to find which node holds the resource group currently

- List nodes in the cluster and its status using lsrpnode

To change resource group status use:

To Stop: chrg -o offline -s " name = = 'db2_bculinux_NLG_beluga007' "

To Start: chrg -o online -s " name = = 'db2_bculinux_NLG_beluga007' "

To find the cluster service IP ( Floating IP or virtual IP):

lsrsrc -Ab IBM.ServiceIP

To find application resources:

lsrsrc -Ab IBM.Application

To find network resources:

lsrsrc -Ab IBM.NetworkInterface

To find TSA version:

LINK:

http://www-01.ibm.com/support/docview.wss?uid=swg21426022

Use the following command to check the version of Tivoli System Automation for Multiplatforms :

$ /usr/sbin/rsct/bin/samversion

rsa315s006a 3.1.0.6 Jan 27 2010 17:27:20

Use the following command to check the version of Reliable Scalable Cluster Technology

$ /usr/sbin/rsct/install/bin/ctversion -b

rgris002a 2.5.4.2 10022.11:02:07

Errors faced:

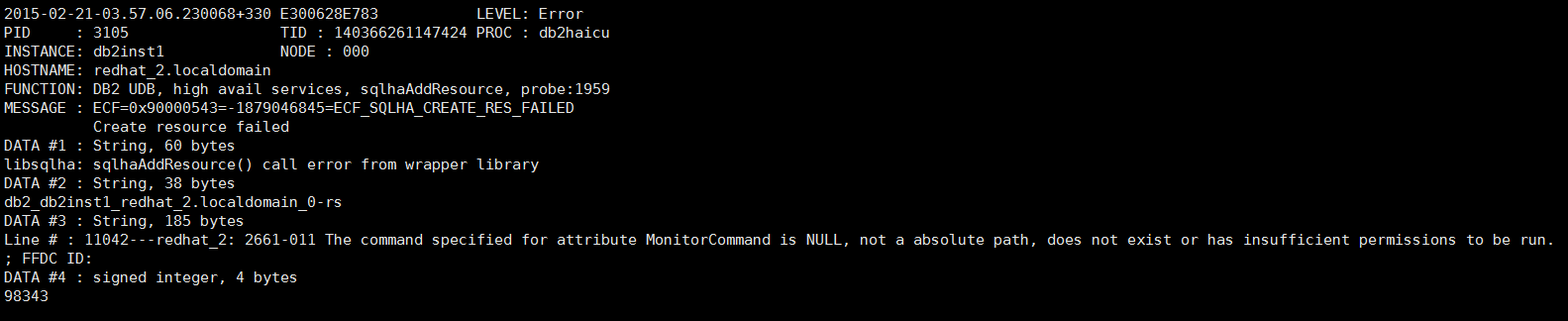

"The command specified for attribute MonitorCommand is NULL, not a absolute path, does not exist or has insufficient permissions to be run."

Failure message:

db2haicu failed with the below error message in db2diag.log while cluster setup:

2015-02-21-03.57.06.230068+330 E300628E783 LEVEL: Error

PID : 3105 TID : 140366261147424 PROC : db2haicu

INSTANCE: db2inst1 NODE : 000

HOSTNAME: redhat_2.localdomain

FUNCTION: DB2 UDB, high avail services, sqlhaAddResource, probe:1959

MESSAGE : ECF=0x90000543=-1879046845=ECF_SQLHA_CREATE_RES_FAILED

Create resource failed

DATA #1 : String, 60 bytes

libsqlha: sqlhaAddResource() call error from wrapper library

DATA #2 : String, 38 bytes

db2_db2inst1_redhat_2.localdomain_0-rs

DATA #3 : String, 185 bytes

Line # : 11042---redhat_2: 2661-011 The command specified for attribute MonitorCommand is NULL, not a absolute path, does not exist or has insufficient permissions to be run.

; FFDC ID:

DATA #4 : signed integer, 4 bytes

98343

Causes:

The DB2 automation scripts are missing from /usr/sbin/rsct/sapolicies on the server.

Solution:

To install the DB2 automation script go the DB2 installation path:

cd /opt/ibm/db2/V10.1/install/tsamp

Issue the below command:

As ROOT:

./db2cptsa

[root@redhat_1 tsamp]# ./db2cptsa

Now the DB2 automation scripts will be available.

Now delete the cluster domain by issuing db2haicu -delete command and recreate the cluster domain.

START/STOP cluster with TSA commands

Links:

START/STOP in TSA HADR:

https://drive.google.com/file/d/0B2ap4DUtQJspbGJDb0hXbGhwc2M/view?usp=sharing

Stopping the servers in cluster:

- Disable Critical Resource Protection Methods to disable nodes getting restarted due to start/stop by cluster software:

List current value of CritRsrcProtMethod:

lsrsrc -c IBM.PeerNode CritRsrcProtMethod

To change the value to 5 disables critical resource protection:

chrsrc -c IBM.PeerNode CritRsrcProtMethod=5 - Bring down the cluster resource group for the HA database.

chrg -o offline db2_db2inst1_db2inst1_SAMPLE-rg - Bring down the cluster resource group for the primary server:

chrg -o offline db2_db2inst1_HADR_1_0_rg - Bring down the cluster resource group for standby server:

chrg -o offline db2_db2inst1_HADR_2_0_rg - Stop the domain:

stoprpdomain hadr - You can shutdown the standby server, TSAMP will not bring it online.

- Check the status of the domain:

lsrpdomain - If its down start the domain first:

startrpdomain - Start the cluster resource groups for each node:

chrg -o online db2_db2inst1_HADR_1_0_rg

chrg -o online db2_db2inst1_HADR_2_0_rg

(or)

chrg -o online -s "Name like 'db2_db2inst1_HADR_%'"

This command will start the DB2 and brings up the databases and reintegrates HADR if it is in PEER state. - After cluster resource groups come online, bring the cluster resource group for database online:

chrg -o online db2_db2inst1_db2inst1_SAMPLE-rg

- Check whether the databases are up or not.

- Use lsrg -m command to list which node the resources are residing.

List dependencies:

When you cluster an instance using db2haicu, TSA/RSCT resources are created as well as dependencies between them.

To see dependencies in your cluster you can use the

lsrel -Ab

The above command will list the dependencies. Here is the example:

An example of a dependency is the one created between the instance resource and the public network. If the public network goes down, clients can no longer connect to the database so a failover is necessary. Check if the public network is up.

DB2Diag.log:

Messages related to the db2haicu and integrated HA code are prefixed by 'sqlha'.

When you cluster an instance using db2haicu, TSA/RSCT resources are created as well as dependencies between them.

To see dependencies in your cluster you can use the

lsrel command.lsrel -Ab

The above command will list the dependencies. Here is the example:

db2inst1@host1:~> lsrel -Ab

Displaying Managed Relationship Information:

All Attributes

Managed Relationship 1:

Class:Resource:Node[Source] = IBM.Application:db2_db2inst1_host1_0-rs

Class:Resource:Node[Target] = {IBM.Equivalency:db2_public_network_0}

Relationship = DependsOn

Conditional = NoCondition

Name = db2_db2inst1_host1_0-

rs_DependsOn_db2_public_network_0-rel

ActivePeerDomain = hadr_domain

ConfigValidity =

An example of a dependency is the one created between the instance resource and the public network. If the public network goes down, clients can no longer connect to the database so a failover is necessary. Check if the public network is up.

DB2Diag.log:

Messages related to the db2haicu and integrated HA code are prefixed by 'sqlha'.

DB2 scripts locations:

"/usr/sbin/rsct/sapolicies/db2".

The name of each script contains the current DB2 version. If the version is 9.7 then the scripts will start with suffix db2V9.7.

The scripts are as follows:

Instance monitor script (db2V97_monitor.ksh)

The instance monitor script is responsible for monitoring the instance status.

By default, TSA/RSCT calls this on a specified interval on both nodes in the HADR pair.

Based on this, the OpState of the instance resources are updated.

The instance resources are db2_db2inst1_host1_0-rs and db2_db2inst1_host2_0-rs.

Return codes:

OpState: online

Return code: 1

Meaning (DB2 perspective): Instance is started.

OpState: Offline

Return code: 2

Meaning (DB2 perspective): Instance not started or having problem.

Instance start script (db2V97_start.ksh)

The instance start script is responsible for starting up the DB2 instance and activating its databases.

If necessary, the start script can also perform reintegration.

Instance stop script (db2V97_stop.ksh)

The instance stop script is responsible for stopping the instance.

It is used to deliberately stop the instance in the case where something has gone wrong on the respective host.

HADR monitor script (hadrV97_monitor.ksh)

The HADR monitor script is responsible for monitoring HADR database status.

By default, TSA/RSCT calls this script every 29 seconds on both hosts in the HADR pair.

Based on this, the OpState of the HADR resources are updated.

Return codes:

OpState: online

Return code: 1

Meaning (DB2 perspective): HADR is primary

OpState: Offline

Return code: 2

Meaning (DB2 perspective): HADR is standby or having problem.

HADR start script (hadrV97_start.ksh)

The HADR start script is responsible for starting HADR on an existing standby database, to have it assume a primary role.

HADR stop script (hadrV97_stop.ksh)

The HADR stop script is responsible for stopping HADR. It is used to deliberately stop HADR in the case where something has gone wrong on the respective host.

syslog

syslog shows a history of resource management by TSA/RSCT.

On Linux systems, syslog can be found under /var/log/messages/syslog.out.

On AIX systems, syslog can be found under /tmp/syslog.out.

To modify this, look in syslog.conf. This file can be found under /etc.

lsrsrc

The lsrsrc command lists the attributes of the resources.

lsrsc is a useful command to understand the inner workings of various resources belonging to a particular resource class.

The most common classes you would work with are:

IBM.Application

IBM.ServiceIP

IBM.Test

These classes are created by TSA/RSCT and act as a doorway for other products to interact with the cluster manager.

Cluster resource

Most commonly, cluster resources belong to the class IBM.Application.

Cluster resources are created to represent entities such as DB2 instance or HADR database.

Flag resource

Flag resources belong to the class IBM.Test. Flag resources are transient in nature.

They are created by DB2 code and used to communicate ongoing action to TSA.

At the end of the action they should be cleaned up automatically. If a flag is left behind, this is an indicator that something went wrong.

It can be checked as follows:

lsrsrc IBM.Test

getsadata

This is the equivalent of db2support from theTSA/RSCT perspective.

To gather maximum data, run getsadata with the all flag.

This utility must be run as root. IBM Support can guide you on this.

Peer window expires

If the

HADR_PEER_WINDOW configuration parameter is too small, it may expire by the time the cluster manager tries to issue a takeover from the standby.

Configuration issues with db2haicu

Host name

The integrated HA solution relies on various sources for host name.

To find out how TSA/RSCT views the host name, issue

lsrpnode.To find out how DB2 views the host name, issue

uname. (Note: It is good practice to keep uname host name consistent with the output of host name.)

IBM link

http://www-01.ibm.com/support/docview.wss?uid=swg21570815

For more about read getting started with TSA guide below:

http://www.ibm.com/developerworks/tivoli/library/tv-tivoli-system-automation/

References:

Recommendations for TSA controlled DB2 HADR:

http://www-01.ibm.com/support/docview.wss?uid=swg21624179

TSA Recovery Manager log file:

http://www-01.ibm.com/support/docview.wss?uid=swg21625077

TSA information center:

http://www-01.ibm.com/support/knowledgecenter/SSRM2X/welcome

Subscribe to:

Posts (Atom)